What is MapReduce? Explain how map and reduce work with a suitable example

Step 3 is reduce.

Mapreduce Tutorial Mapreduce Example In Apache Hadoop Edureka

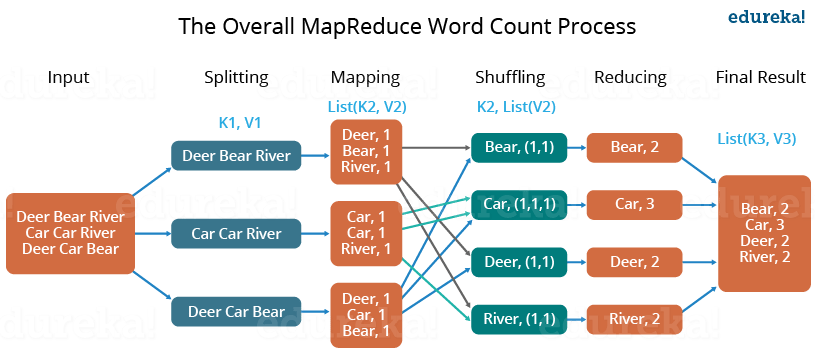

The map takes a set of data and converts it into another set of data, where individual elements are broken down into tuples (key/value pairs). Reduce then takes the output of the map as its input and combines those tuples by key, merging the values that share each key. The reduce task executes code written by the user and writes its output to a file that is part of the surrounding distributed file system. The combiner class sits between the map class and the reduce class to reduce the volume of data transferred from map to reduce. In conclusion, data flow in MapReduce is the combination of map and reduce.
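
The three roles described above (map, combiner, reduce) can be sketched in plain Python. This is a minimal single-process illustration, not Hadoop's API: the function names `mapper`, `combiner` and `reducer` and the sample split are hypothetical. It shows how a combiner shrinks one mapper's output before it would be sent to the reducers.

```python
from collections import Counter
from typing import Iterable, Iterator

def mapper(line: str) -> Iterator[tuple[str, int]]:
    # Map: break a line into (word, 1) key/value tuples.
    for word in line.split():
        yield (word.lower(), 1)

def combiner(pairs: Iterable[tuple[str, int]]) -> list[tuple[str, int]]:
    # Combiner: a local reduce over ONE mapper's output, so fewer tuples
    # need to travel across the network to the reducers.
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())

def reducer(key: str, values: Iterable[int]) -> tuple[str, int]:
    # Reduce: merge every value that shares a key into a single value.
    return (key, sum(values))

split = ["car car river", "deer car bear"]  # hypothetical input split of one mapper
raw_pairs = [pair for line in split for pair in mapper(line)]
combined = combiner(raw_pairs)

print(len(raw_pairs), "->", len(combined))  # 6 -> 4
print(combined)  # [('bear', 1), ('car', 3), ('deer', 1), ('river', 1)]
print(reducer("car", [3, 2]))  # ('car', 5), e.g. partial counts from two mappers
```

Because the combiner has the same shape as the reducer (it sums values per key), it can only be used safely when the reduce operation gives the same result whether it is applied once or in stages, as summing does.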

This is the very first phase in the execution of a MapReduce program. The first stage is the map stage and the second is the reduce stage. Step 2 is map. Next, we will write a mapping function to identify such patterns in our data.

The master is informed of the location and size of each of these files and of the reduce task for which each is destined. First, we will identify the keywords that we are going to map from the data to conclude that it's something related to games. Here is a brief summary of how the MapReduce combiner works: a combiner does not have a predefined interface, and it must implement the Reducer interface's reduce method. MapReduce architecture consists of mainly two processing stages.
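
For the games scenario, identifying keywords and mapping posts onto them might look like the sketch below. Everything here is an assumption for illustration: the keyword set `GAME_KEYWORDS`, the `(user_id, text)` record format, and the sample posts are hypothetical, and tokenization is a naive whitespace split. The mapper flags each post, sorting stands in for the shuffle, and the reducer decides per user.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical keyword list; a real job would tune this to its own data.
GAME_KEYWORDS = {"game", "games", "gaming", "xbox", "playstation"}

def map_post(user_id: str, text: str) -> tuple[str, int]:
    # Map: emit (user, 1) if the post mentions a game keyword, else (user, 0).
    words = set(text.lower().split())  # naive whitespace tokenization
    return (user_id, 1 if words & GAME_KEYWORDS else 0)

def reduce_user(user_id: str, flags: list[int]) -> tuple[str, int]:
    # Reduce: a user "talks about games" if any of their posts did.
    return (user_id, max(flags))

posts = [  # hypothetical (user_id, text) records
    ("u1", "Playing games all weekend"),
    ("u2", "New phone arrived today"),
    ("u1", "Any xbox tips?"),
    ("u3", "Gaming night with friends"),
]

mapped = sorted(map_post(u, t) for u, t in posts)  # sorting stands in for the shuffle
per_user = [
    reduce_user(user, [flag for _, flag in group])
    for user, group in groupby(mapped, key=itemgetter(0))
]
share = sum(flag for _, flag in per_user) / len(per_user)
print(f"{share:.0%} of users talked about games")  # 67% of users talked about games
```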

For example, it is used for classifiers, indexing, searching, and building recommendation engines on e-commerce sites (Flipkart, Amazon, etc.); it is also used for analytics by several companies. The following MapReduce task diagram shows the combiner phase. Between the map and reduce stages, an intermediate process takes place. Now suppose we have to perform a word count on sample.txt using MapReduce.

Suppose sample.txt contains: Dear, Bear, River, Car, Car, River, Deer, Car and Bear. In this manner, a MapReduce job is executed over the cluster. Say you are processing a large amount of data and trying to find out what percentage of your user base was talking about games. The reason MapReduce is split into map and reduce is that the different parts can easily be done in parallel.
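
The word count on sample.txt can be simulated end to end in a few lines. This is a sketch, not Hadoop code: it assumes the words are laid out as three lines (one line per input split), and the helpers `map_words`, `shuffle` and `reduce_counts` are hypothetical names. Words are counted case-sensitively, as written.

```python
from collections import defaultdict

def map_words(line):
    # Map: emit (word, 1) for every word in the line.
    return [(word, 1) for word in line.split()]

def shuffle(pairs):
    # Shuffle and sort: group the values by key, ordered by key.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())

def reduce_counts(key, values):
    # Reduce: add up the 1s emitted for each word.
    return key, sum(values)

# Assumption: sample.txt holds these three lines.
lines = ["Dear Bear River", "Car Car River", "Deer Car Bear"]
mapped = [pair for line in lines for pair in map_words(line)]
result = [reduce_counts(key, values) for key, values in shuffle(mapped)]
print(result)
# [('Bear', 2), ('Car', 3), ('Dear', 1), ('Deer', 1), ('River', 2)]
```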

The MapReduce algorithm contains two important tasks, namely map and reduce. The input to a MapReduce job is divided into fixed-size pieces called input splits; an input split is a chunk of the input that is consumed by a single map. Map those times into speeds based on the distance between the meters. Usually the output of the map task is large, so the volume of data transferred to the reduce task is high.
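
The idea of fixed-size input splits, each consumed by exactly one map task, can be sketched as below. As a simplification, splits here are measured in records; Hadoop measures them in bytes and by default typically aligns them with the HDFS block size. The helper name `make_splits` and the sample records are hypothetical.

```python
def make_splits(records, split_size):
    # Divide the input into fixed-size pieces; each piece would be
    # handed to exactly one map task. The last split may be shorter.
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    return [records[i:i + split_size] for i in range(0, len(records), split_size)]

records = [f"line {n}" for n in range(1, 8)]  # hypothetical 7-record input
splits = make_splits(records, 3)
for task_id, split in enumerate(splits):
    print(f"map task {task_id}: {split}")
# map task 0: ['line 1', 'line 2', 'line 3']
# map task 1: ['line 4', 'line 5', 'line 6']
# map task 2: ['line 7']
```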

A word count example of MapReduce: let us understand how MapReduce works by taking an example where I have a text file called example.txt whose contents are as follows. In this phase, data in each split is passed to a mapping function to produce output values. Secondly, the reduce task takes the output from a map as an input and combines those data tuples into a smaller set of tuples.
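
Because each split is mapped independently, the mapping phase can run concurrently. The sketch below uses a thread pool as a stand-in for separate cluster nodes (in CPython, threads do not give true CPU parallelism because of the GIL, so this only illustrates the independence of the map tasks). The splits and the helpers `map_split` and `reduce_all` are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def map_split(split):
    # Mapping phase: one split in, a list of (word, 1) output values out.
    return [(word, 1) for line in split for word in line.split()]

def reduce_all(mapped_outputs):
    # Reduce phase: combine the tuples from every mapper into a smaller
    # set of (word, total) tuples.
    totals = Counter()
    for pairs in mapped_outputs:
        for word, one in pairs:
            totals[word] += one
    return dict(totals)

splits = [["deer bear"], ["river car"], ["car bear"]]  # hypothetical splits

with ThreadPoolExecutor(max_workers=3) as pool:
    # pool.map returns results in the same order as the input splits.
    mapped_outputs = list(pool.map(map_split, splits))

print(reduce_all(mapped_outputs))  # {'deer': 1, 'bear': 2, 'river': 1, 'car': 2}
```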

The MapReduce algorithm, or flow, is highly effective in handling big data. The intermediate data is stored in the local file system. For example, get the time between two impulses on a pair of pressure meters on the road.

The actual MapReduce processing happens in the TaskTracker. From the features perspective, MapReduce is a programming model that can be used for large-scale distributed processing on systems like Hadoop and HDFS. As the sequence of the name MapReduce implies, the reduce task is always performed after the map job.

The intermediate process performs operations such as shuffling and sorting of the mapper output data. The above diagram gives an overview of MapReduce, its features and uses. Let us take a simple example and use MapReduce to solve a problem. When the master assigns a reduce task to a worker process, that task is given all the files that form its input.
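
The intermediate shuffle and sort, together with the per-reducer files each map task writes, can be sketched as follows. This is an in-memory simulation under stated assumptions: `NUM_REDUCERS`, `partition`, `map_side` and `reduce_side` are hypothetical, lists stand in for files on local disk, and the hash-modulo partitioning is in the spirit of Hadoop's default `HashPartitioner`. `zlib.crc32` is used because Python's built-in `hash()` of a string is randomized per process.

```python
import zlib
from collections import defaultdict

NUM_REDUCERS = 2  # hypothetical number of reduce tasks

def partition(key: str, num_reducers: int) -> int:
    # Decide which reduce task a key is destined for (stable across runs).
    return zlib.crc32(key.encode("utf-8")) % num_reducers

def map_side(pairs, num_reducers):
    # One "file" (a list here) per reduce task, as a map worker would write locally.
    files = defaultdict(list)
    for key, value in pairs:
        files[partition(key, num_reducers)].append((key, value))
    return files

def reduce_side(files_for_reducer):
    # Shuffle and sort: merge the files from all mappers, sort by key,
    # then group the values of each key for the reduce function.
    merged = sorted(pair for f in files_for_reducer for pair in f)
    grouped = defaultdict(list)
    for key, value in merged:
        grouped[key].append(value)
    return dict(grouped)

mapper_a = map_side([("car", 1), ("bear", 1), ("car", 1)], NUM_REDUCERS)
mapper_b = map_side([("river", 1), ("car", 1)], NUM_REDUCERS)

for r in range(NUM_REDUCERS):
    # Each reducer receives its own file from every mapper.
    print(r, reduce_side([mapper_a.get(r, []), mapper_b.get(r, [])]))
```

Since every mapper uses the same partition function, all values for a given key end up at the same reducer.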

Let us start with the applications of MapReduce and where it is used. The map task creates a file for each reduce task on the local disk of the worker that executes the map task. Reduce those speeds to an average speed.
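
The road-speed example (get the time between two impulses, map those times into speeds, reduce the speeds to an average) can be sketched like this. The meter spacing `METER_DISTANCE_M`, the sensor-pair IDs and the readings are all hypothetical values chosen for illustration.

```python
from collections import defaultdict
from statistics import mean

METER_DISTANCE_M = 2.0  # hypothetical spacing between the two pressure meters, in metres

def map_time_to_speed(sensor_id, delta_t_s):
    # Map: convert the time between the two impulses into a speed in m/s.
    if delta_t_s <= 0:
        raise ValueError("time between impulses must be positive")
    return sensor_id, METER_DISTANCE_M / delta_t_s

def reduce_average(sensor_id, speeds):
    # Reduce: collapse all speeds seen by one meter pair into an average.
    return sensor_id, mean(speeds)

# Hypothetical (meter pair, seconds between impulses) readings.
readings = [("road-1", 0.25), ("road-1", 0.5), ("road-2", 0.5),
            ("road-1", 0.125), ("road-1", 1.0)]

grouped = defaultdict(list)
for sensor_id, delta_t in readings:
    key, speed = map_time_to_speed(sensor_id, delta_t)
    grouped[key].append(speed)

results = [reduce_average(k, v) for k, v in sorted(grouped.items())]
print(results)  # [('road-1', 7.5), ('road-2', 4.0)]
```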

Big Data Hadoop Mapreduce Framework Edupristine