What Is the MapReduce Technique?

MapReduce is made up of two distinct tasks: map and reduce. It is a programming paradigm that enables massive scalability across hundreds or thousands of servers in a Hadoop cluster.

What Is Mapreduce How It Works Hadoop Mapreduce Tutorial

As its processing component, MapReduce is the heart of Apache Hadoop.

MapReduce is a processing technique and programming model for distributed computing; the Hadoop implementation is based on Java. It processes large data sets with a parallel, distributed algorithm on a cluster. Traditional enterprise systems, by contrast, normally rely on a centralized server to store and process data. In Hadoop, the map task is carried out by a Mapper class and the reduce task by a Reducer class.

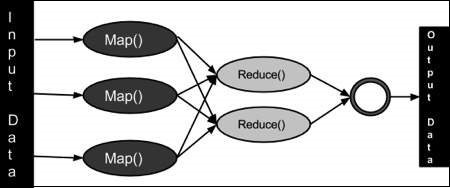

A MapReduce program works in two phases: map and reduce. MapReduce is a core component of the Apache Hadoop software framework. The Mapper class takes the input, tokenizes it, maps it, and sorts it.
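
The two phases can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Hadoop's Java API: the function names `map_phase` and `reduce_phase` and the sample lines are assumptions made for this example, and everything runs in a single process rather than on a cluster.

```python
from collections import defaultdict

def map_phase(line):
    """Tokenize one input line and emit a (word, 1) pair for each token."""
    return [(word.lower(), 1) for word in line.split()]

def reduce_phase(pairs):
    """Sum the counts emitted for each word."""
    totals = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    return dict(totals)

# Hypothetical input lines, made up for this sketch.
lines = ["Hadoop runs MapReduce", "MapReduce runs map and reduce"]

# Map every line, then sort the emitted pairs by word before reducing.
mapped = sorted(pair for line in lines for pair in map_phase(line))
word_counts = reduce_phase(mapped)
```

Here `word_counts` ends up holding one total per distinct word, which is the classic word-count shape that most MapReduce tutorials start from.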

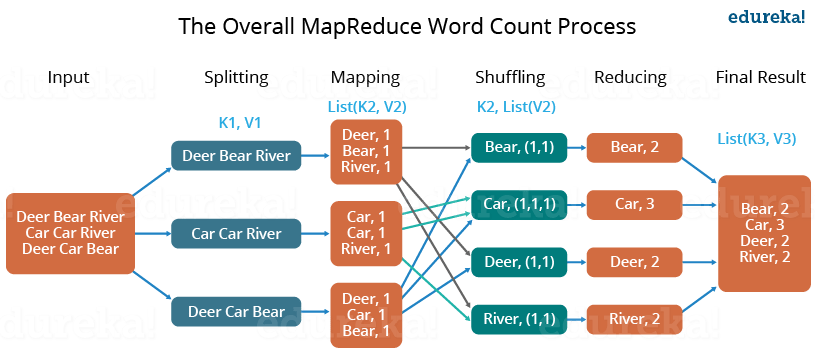

Hadoop enables resilient, distributed processing of massive unstructured data sets across clusters of commodity computers, in which each node has its own storage. A MapReduce program is composed of a map procedure, which performs filtering and sorting (such as sorting students by first name into queues, one queue for each name), and a reduce method, which performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). Sorting methods are implemented in the Mapper class. MapReduce is both a software framework and a programming model for processing huge amounts of data.
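
The student example can be written out as a small Python sketch. The student names and the helper names `map_student` and `reduce_queue` are hypothetical, chosen only to mirror the description; a real Hadoop job would express the same steps through Mapper and Reducer classes.

```python
from collections import defaultdict

# Hypothetical student list, invented for this illustration.
students = ["Ana Lopez", "Ben Kim", "Ana Silva", "Cara Diaz", "Ben Stone", "Ana Wu"]

def map_student(full_name):
    """Map step: key each student by first name."""
    first_name = full_name.split()[0]
    return (first_name, full_name)

# Place each student into the queue for their first name.
queues = defaultdict(list)
for student in students:
    key, value = map_student(student)
    queues[key].append(value)

def reduce_queue(first_name, queue):
    """Reduce step: summarize one queue as its length."""
    return (first_name, len(queue))

# One (name, count) result per queue, visited in sorted key order.
name_frequencies = dict(reduce_queue(name, queue) for name, queue in sorted(queues.items()))
```

With this input, `name_frequencies` is `{"Ana": 3, "Ben": 2, "Cara": 1}`.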

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel, distributed algorithm on a cluster. It is built on the divide-and-conquer approach. MapReduce sorts the key-value pairs output by the mapper by their keys. Big data, however, is not only about scale; it involves one or more of the following aspects: velocity, variety, volume, and complexity.
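
Sorting the mapper output by key is what lets all values for the same key be handed to one reduce call. A minimal single-machine sketch, assuming made-up mapper output and using only the Python standard library (Hadoop performs this step inside the framework, across nodes):

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical mapper output: unsorted (key, value) pairs.
mapper_output = [("b", 1), ("a", 1), ("c", 1), ("a", 1), ("b", 1), ("a", 1)]

# Sort by key so that equal keys become adjacent.
sorted_pairs = sorted(mapper_output, key=itemgetter(0))

# groupby only merges adjacent items, which is why the sort must come first.
grouped = {
    key: [value for _, value in group]
    for key, group in groupby(sorted_pairs, key=itemgetter(0))
}
```

After this step `grouped` maps each key to the list of its values, for example `"a"` to `[1, 1, 1]`, ready to be passed to a reducer.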

The MapReduce algorithm contains two important tasks: map and reduce. For example, the volume of data that Facebook or YouTube must collect and manage on a daily basis falls under the category of big data. MapReduce, when coupled with HDFS, can be used to handle big data. The term MapReduce refers to two separate and distinct tasks that Hadoop programs perform.

Map tasks deal with splitting and mapping the data, while reduce tasks shuffle and reduce it. Sorting is one of the basic MapReduce algorithms for processing and analyzing data. Map takes a set of data and converts it into another set of data in which individual elements are broken down into tuples (key-value pairs). Reduce then collects and combines the output of the map task.
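
The full split, map, shuffle, and reduce flow can be simulated with one small driver function. This is a sketch under stated assumptions: `run_mapreduce`, `temperature_mapper`, `max_reducer`, and the temperature readings are all hypothetical names and data, and the "splits" are just list slices in one process rather than blocks stored on different nodes.

```python
from collections import defaultdict

def run_mapreduce(records, mapper, reducer, num_splits=2):
    """Simulate split -> map -> shuffle -> reduce in a single process."""
    # Split: divide the input into chunks, as if spread over several nodes.
    splits = [records[i::num_splits] for i in range(num_splits)]
    # Map: turn every record into zero or more (key, value) tuples.
    mapped = [pair for split in splits for record in split for pair in mapper(record)]
    # Shuffle: bring all values that share a key together.
    shuffled = defaultdict(list)
    for key, value in mapped:
        shuffled[key].append(value)
    # Reduce: combine each key's values into one result, in sorted key order.
    return {key: reducer(key, values) for key, values in sorted(shuffled.items())}

# Hypothetical (city, temperature) readings.
readings = [("Oslo", 4), ("Cairo", 31), ("Oslo", 7), ("Cairo", 28), ("Lima", 19)]

def temperature_mapper(record):
    city, temperature = record
    yield (city, temperature)

def max_reducer(city, temperatures):
    return max(temperatures)

max_by_city = run_mapreduce(readings, temperature_mapper, max_reducer)
```

For these readings `max_by_city` is `{"Cairo": 31, "Lima": 19, "Oslo": 7}`. Swapping in a different mapper and reducer changes the job without touching the driver, which is the main appeal of the model.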

The following illustration depicts a schematic view of a … MapReduce is well suited to sorting large data sets. The fundamentals of this HDFS and MapReduce system, commonly referred to as Hadoop, were discussed in our previous article.

Hadoop Mapreduce Tutorialspoint

Mapreduce Tutorial Mapreduce Example In Apache Hadoop Edureka

Mapreduce 101 What It Is How To Get Started Talend

Map Reduce Technique Flowchart Download Scientific Diagram
